Processamento de Imagem e Visão

Project 1: Calibrate the Kinect

Objectives

The input data

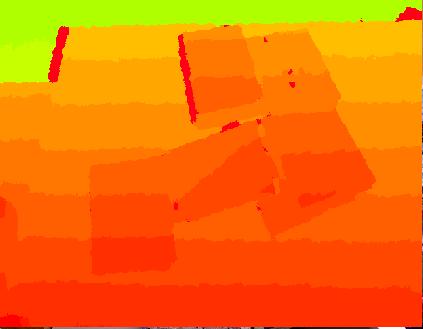

"depth" image |

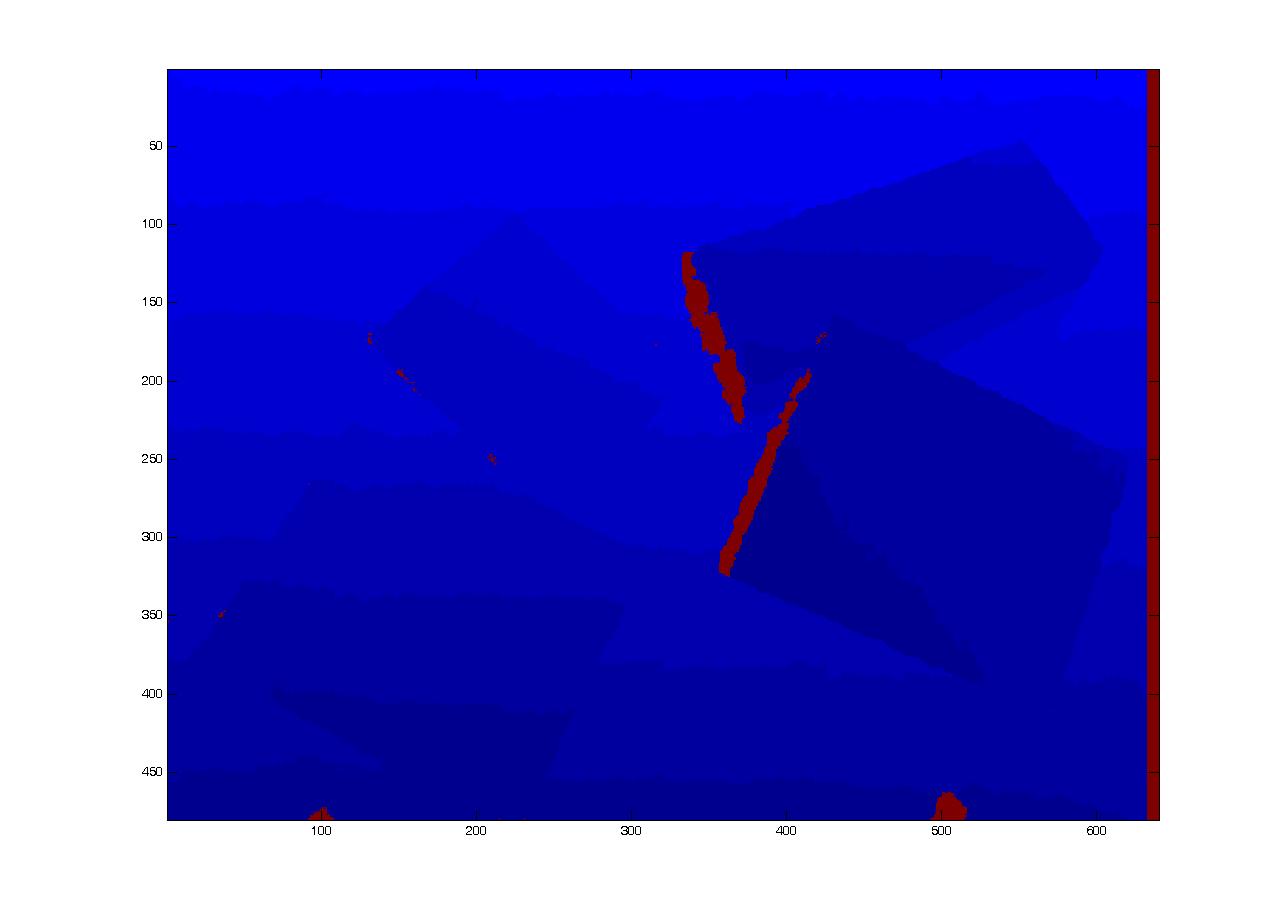

Infrared image IR

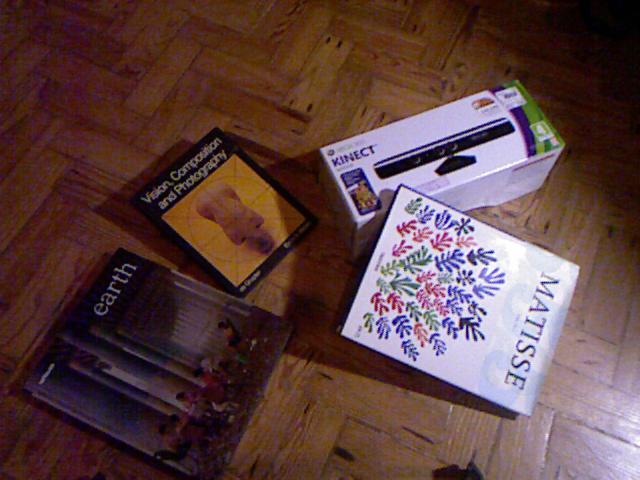

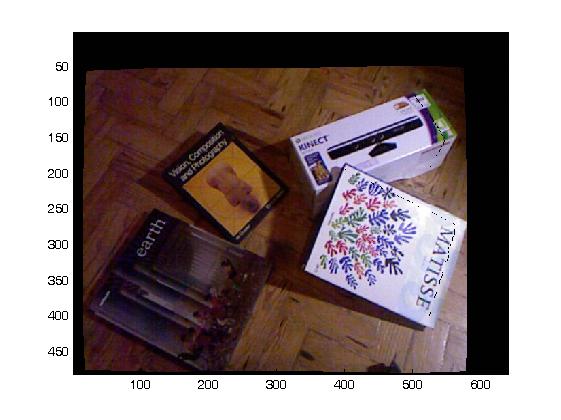

Color image RGB

| Variable | Description |
| --- | --- |
| depth[i][j] | 480x640 array of unsigned integers (32 bits) with values between 0 and 2047. A value of 2047 indicates an "invalid measurement" and should not be used (you can discard or interpolate it!). Pixels in the last column(s), depth(:,640), are unreliable. |
| IR[i][j] | 480x640 array of unsigned integers (8 bits) with values ranging from 0 to 255. It is an infrared image (a grayscale MATLAB image!) from the same camera as the depth image. In other words, the depth and IR images are aligned pixel by pixel. |
| rgb[m,n,k] | 480x640x3 array of unsigned integers (8 bits) with values ranging from 0 to 255. It is a regular color image captured by the second Kinect camera. |
| xyz | Nx3 array of doubles: the 3D coordinates of points from a known object in a particular (arbitrary) reference frame. |
| xy-ir | Nx2 array of doubles: the 2D image coordinates corresponding to the 3D points. [xy-ir(K,1) xy-ir(K,2)] is the image location of point K with 3D coordinates [xyz(K,1) xyz(K,2) xyz(K,3)]. |
| xy-rgb | The same as xy-ir, but for the RGB image. |
| params | A 2x1 array with the depth camera parameters: params(1) = alfa, params(2) = beta. Remember that Z_i = 1/(alfa*d_i + beta), where Z_i must be expressed in the depth-camera reference frame (not in the object reference frame). |
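The relation Z_i = 1/(alfa*d_i + beta) can be applied to the whole depth image at once. The following is only a minimal sketch: the function name `depth_to_z` is hypothetical, and marking invalid pixels (value 2047) and the last column as NaN is just one possible choice, since interpolating them is also allowed.

```matlab
function Z = depth_to_z(depth, params)
% Convert raw Kinect depth values to metric depth Z (depth-camera frame).
% depth  : 480x640 array of raw values in 0..2047
% params : [alfa; beta], with Z = 1/(alfa*d + beta)
    d = double(depth);                      % avoid integer arithmetic
    Z = 1 ./ (params(1) * d + params(2));
    Z(depth == 2047) = NaN;                 % 2047 marks an invalid measurement
    Z(:, end) = NaN;                        % last column is reported as unreliable
end
```

Keeping the invalid pixels as NaN preserves the 480x640 layout, so later steps that rely on the pixel ordering are not affected.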

xyz, xy-ir and xy-rgb may contain outliers!
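One common way to cope with outliers in 3D-2D correspondences is to wrap a Direct Linear Transform (DLT) fit in a RANSAC loop. The sketch below is an illustration of that general technique, not a prescribed solution: the function names, the iteration count `n_iter` and the pixel threshold `tol` are all assumptions, and the coordinate normalization that usually improves conditioning is omitted for brevity. It also assumes that column 1 of the 2D points is x and column 2 is y.

```matlab
function [P, inliers] = ransac_dlt(xyz, xy, n_iter, tol)
% Robustly estimate a 3x4 projection matrix P such that x ~ P*[X;1].
% xyz : Nx3 3D points, xy : Nx2 image points (may contain outliers)
% n_iter : number of random samples, tol : inlier threshold in pixels
    N = size(xyz, 1);
    best = false(N, 1);
    for it = 1:n_iter
        idx = randperm(N, 6);                     % 6 points give 12 equations
        Pc = dlt(xyz(idx, :), xy(idx, :));
        ok = reproj_error(Pc, xyz, xy) < tol;
        if nnz(ok) > nnz(best)
            best = ok;                            % keep the largest consensus set
        end
    end
    inliers = best;
    P = dlt(xyz(inliers, :), xy(inliers, :));     % refit on all inliers
end

function P = dlt(xyz, xy)
% Direct Linear Transform: two linear equations per correspondence.
    n = size(xyz, 1);
    Xh = [xyz, ones(n, 1)];
    A = [Xh, zeros(n, 4), -bsxfun(@times, xy(:, 1), Xh);
         zeros(n, 4), Xh, -bsxfun(@times, xy(:, 2), Xh)];
    [~, ~, V] = svd(A, 0);
    P = reshape(V(:, end), 4, 3)';                % rows of P are p1, p2, p3
end

function e = reproj_error(P, xyz, xy)
% Euclidean distance (pixels) between projected and measured points.
    ph = [xyz, ones(size(xyz, 1), 1)] * P';
    e = sqrt(sum((bsxfun(@rdivide, ph(:, 1:2), ph(:, 3)) - xy) .^ 2, 2));
end
```

Since MATLAB identifiers cannot contain hyphens, the inputs would have to be passed under names such as `xy_ir` and `xy_rgb`, for example `[P_ir, in_ir] = ransac_dlt(xyz, xy_ir, 500, 2)`.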

OUTPUT

| Variable | Description |
| --- | --- |
| d_cam | A structure that contains the depth camera parameters: d_cam.K is a 3x3 matrix with the depth camera intrinsic parameters (the extrinsic parameters are assumed to be K[I\|0]); d_cam.alfa is a double with the estimated value for alfa; d_cam.beta is a double with the estimated value for beta. |
| rgb_cam | A structure with the RGB camera parameters (K, R, t). The extrinsic parameters are relative to the depth camera: rgb_cam.K is a 3x3 matrix with the RGB camera intrinsic parameters; rgb_cam.R is a rotation matrix representing the rotation from the depth camera to the RGB camera; rgb_cam.t is a translation vector representing the translation from the depth camera to the RGB camera. Notice the change: R and T are such that: |
| d_xyz | A 307200x3 matrix where d_xyz(k,:) are the X, Y, Z coordinates of the point at depth image pixel depth(i,j). Note that k = (j-1)*480 + i. |
| rgb2depth | A 480x640x3 array (image) of unsigned integers (uint8) where rgb2depth(i,j,:) contains the corresponding RGB value for each depth image pixel depth(i,j). See the example below. Values outside bounds should be 0 (some pixels of the depth image map outside the RGB image). |
| Extra credit | A bonus will be awarded for the optional parameters d_cam.alfa and d_cam.beta (the "depth parameters"). |
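If a 3x4 projection matrix P has been estimated for a camera, its K, R and t can be recovered with an RQ decomposition of the left 3x3 block. MATLAB has no built-in RQ routine, so the sketch below builds one from `qr` and a row/column flip. The function name `decompose_projection` is hypothetical, and the sketch assumes P ~ K[R|t] with a positive diagonal in K.

```matlab
function [K, R, t] = decompose_projection(P)
% Split a 3x4 projection matrix (defined up to scale) into K, R, t
% such that P ~ K*[R t], K upper triangular with K(3,3) = 1.
    M = P(:, 1:3);
    E = flipud(eye(3));              % exchange matrix, E*E = I
    [Q, U] = qr((E * M)');           % (E*M)' = Q*U  =>  M = (E*U'*E)*(E*Q')
    K = E * U' * E;                  % upper triangular factor
    R = E * Q';                      % orthogonal factor
    S = diag(sign(diag(K)));         % make the diagonal of K positive
    K = K * S;
    R = S * R;
    t = K \ P(:, 4);
    if det(R) < 0                    % P and -P are the same camera
        R = -R;
        t = -t;
    end
    K = K / K(3, 3);                 % remove the arbitrary scale
end
```

When both cameras are calibrated against the same object frame, giving (R_d, t_d) for the depth camera and (R_rgb, t_rgb) for the RGB camera, and assuming the convention X_rgb = R*X_d + t, the relative pose would be R = R_rgb*R_d' and t = t_rgb - R*t_d.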

Example panels: depth, rgb, rgb_cam.

typing

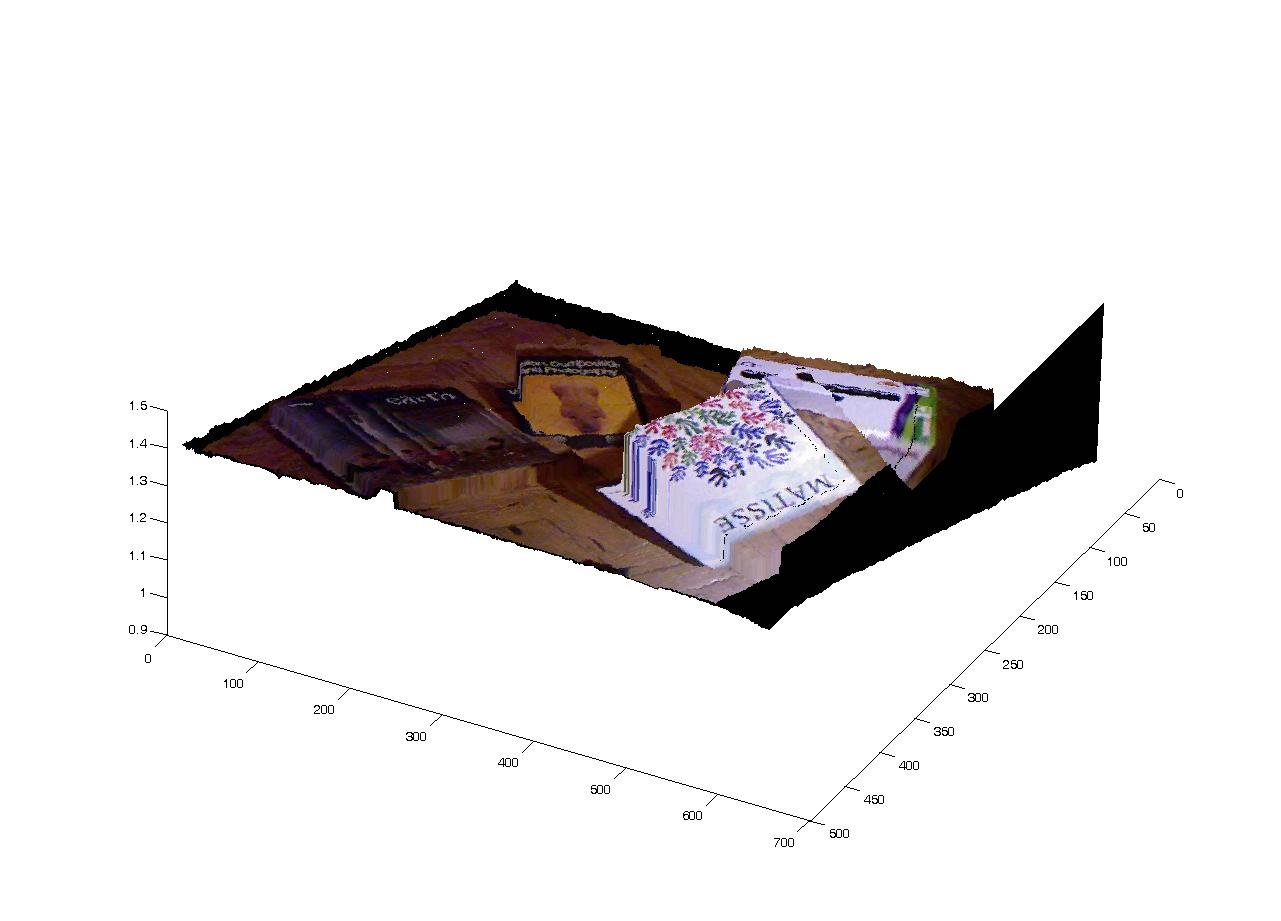

the matlab command: warp(reshape(1./d_xyz(:,3),480,640))= should produce the 3D graphics notice that this kinect was poorly calibrated since the image of the book is mapped on the flloor ! That is how I'm going to check if your software works well :-) |
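The d_xyz and rgb2depth outputs used in this check could be computed along the following lines. This is a sketch under stated assumptions, not a reference solution: the function names are hypothetical, pixel coordinates are taken as x = column and y = row (1-based), the depth and RGB images are assumed to have the same size, and the extrinsics are assumed to follow X_rgb = R*X_depth + t.

```matlab
function d_xyz = backproject_depth(Z, K)
% Back-project every depth pixel to 3D in the depth-camera frame.
% Z : h-by-w metric depth (NaN where invalid), K : 3x3 intrinsics
    [h, w] = size(Z);
    [x, y] = meshgrid(1:w, 1:h);              % x(i,j) = j, y(i,j) = i
    % (:) unrolls column by column, so row k is pixel (i,j), k = (j-1)*h + i
    rays = K \ [x(:)'; y(:)'; ones(1, h * w)];
    d_xyz = bsxfun(@times, rays, Z(:)')';
end

function rgb2depth = map_rgb_to_depth(rgb, d_xyz, rgb_cam)
% Look up the RGB value seen by each depth pixel; 0 where it falls outside.
    [h, w, ~] = size(rgb);
    N = size(d_xyz, 1);
    Xc = rgb_cam.R * d_xyz' + rgb_cam.t(:) * ones(1, N);   % points in RGB frame
    p = rgb_cam.K * Xc;
    n = round(p(1, :) ./ p(3, :));            % column in the RGB image
    m = round(p(2, :) ./ p(3, :));            % row in the RGB image
    % NaN coordinates fail every comparison, so invalid depths stay 0
    ok = Xc(3, :) > 0 & n >= 1 & n <= w & m >= 1 & m <= h;
    flat = reshape(rgb, h * w, 3);
    out = zeros(N, 3, 'uint8');
    out(ok, :) = flat((n(ok) - 1) * h + m(ok), :);
    rgb2depth = reshape(out, h, w, 3);
end
```

Nearest-neighbour rounding keeps the output as uint8 without interpolation; a bilinear lookup would be a possible refinement.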


[d_cam, rgb_cam, d_xyz, rgb2depth]=pivproject1(depth,IR,rgb,xyz,xy-ir,xy-rgb,params)

All other functions must be included in the same file. You only submit one single file!

The merit will be based on performance (least error). Therefore, you are encouraged to use any technique or method that enables you to collect more data from the images in order to increase precision. You can use any software you wish, as long as you submit one single MATLAB file (NO SCRIPTS, ONLY FUNCTIONS)!

Consider also that several datasets (input data) can be supplied, i.e. a sequence of images instead of one single triple (depth, IR, rgb). This will be implemented using cell arrays (e.g. depth_cell = {depth1(:,:), depth2(:,:), ...}). We will talk about this in the lab!
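Since the exact cell-array interface is still to be discussed, the following is only a guess at how a single code path could accept both a plain image and a cell array of images. The helper name `as_cell` is hypothetical.

```matlab
function out = as_cell(x)
% Wrap a single image in a 1x1 cell; leave cell arrays untouched.
    if iscell(x)
        out = x;
    else
        out = {x};
    end
end
```

With this helper, the main function could call `depth_cell = as_cell(depth)` and then loop over `1:numel(depth_cell)`, whichever form the input takes.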


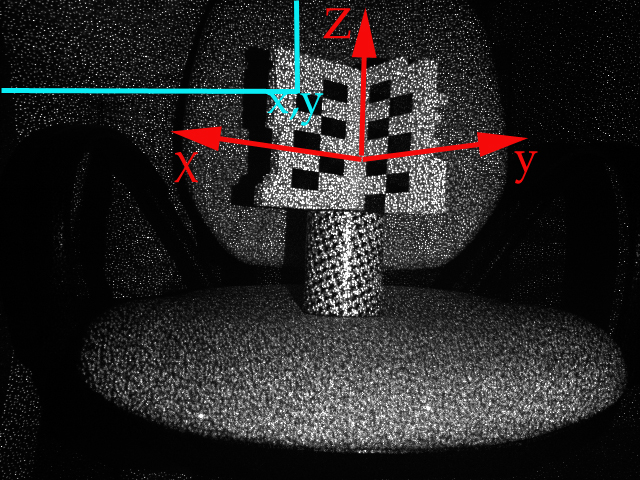

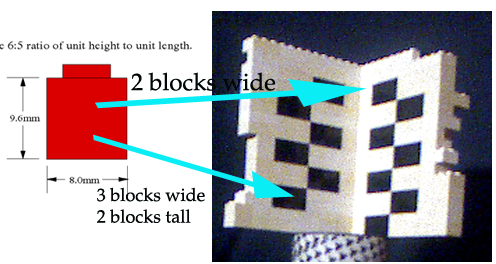

Dimensions of the unit block. The small white area is 2 blocks wide, and the black block is 3 blocks wide x 2 blocks tall.